The Nginx 504 gateway timeout error is as frustrating as it is vague. It disrupts the availability of your web applications and can even cause search engines to de-index your site if it's not resolved quickly.

That said, there's no need to panic. Once you have a clear process in place, Nginx 504 errors are generally not too difficult to diagnose and resolve. We're going to help you get there by covering everything you need to know about this error, including:

- What is an Nginx 504 gateway timeout and what causes it?

- Diagnosing Nginx gateway errors

- How to increase the default Nginx request timeout

- Testing for and resolving network issues

- Checking for general errors on your upstream server

- Diagnosing and resolving upstream server performance

- How to implement Nginx caching to improve response times

- Upgrading your server

- Closing words and advice

What is an Nginx 504 gateway timeout and what causes it?

A “504 Gateway Timeout (Nginx)” error typically means that the Nginx server, acting as a gateway or proxy, didn't receive a response from an upstream server within the time it's configured to wait.

This most commonly occurs in a reverse proxy or load balancing setup, where multiple servers interact with each other. It can happen for a number of reasons, including misconfiguration, high load or other disruption on the upstream server, and network connectivity issues between the servers.

Breaking down the request process step by step helps explain where the timeout happens:

- The client (usually a browser) sends a request to Nginx

- Nginx forwards this request to another server (known as the upstream server). This is usually another web server, an application server, or a similar backend service that you control.

- Nginx waits for a response from the upstream server so that it can relay it to the client, serving the content the client requested.

- If Nginx waits too long for a response (by default, 60 seconds without receiving data from the upstream), it assumes there is an issue and stops waiting to free up resources. It then presents the client with the Nginx 504 gateway timeout error.

Most fixes rely on you having access to the upstream server, but there are some tweaks you can make to your Nginx configuration that might help, too.

Hosting provider doesn't let you tweak your Nginx server? Join BitLaunch to gain full access to your server files on fast, reliable hardware.

Diagnosing Nginx gateway errors

The first step in fixing your Nginx timeout issue is to find the root cause. A good starting point is noting how often this error occurs and under which circumstances. Ask yourself:

- Is the upstream server reachable?

- Is this a persistent issue or intermittent?

- Did it occur immediately on setup, or has it been functioning normally for some time?

- What changes have you made to your upstream and downstream servers lately?

- Is the issue happening for all requests or only for specific URLs / features?

- Can the issue be tied to specific clients (IP ranges, geographies)?

Answering these questions can help you decide which of the steps below to follow. For example, an intermittent issue points towards problems such as your server not having enough resources, an unreliable server provider, or poor database optimization. Issues that appear directly after setup point towards a misconfiguration, while a persistent lack of response could indicate a network issue.
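Logs and user reports are the main sources for these answers, but you can also collect data yourself. The sketch below is a minimal example that assumes bash and curl on the client. It requests a URL at fixed intervals and records the status code and total time of each request, which makes it easier to tell an intermittent 504 from a persistent one. The function names probe and summarize, the 30-request and 10-second defaults, and the 90-second client limit are illustrative choices, not part of any tool.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for sampling a URL over time.

# probe URL [COUNT] [INTERVAL_SECONDS]
# Requests URL COUNT times, INTERVAL seconds apart, printing
# "HH:MM:SS <status> <total_seconds>" for each request. A status of 000
# means curl got no HTTP response at all (DNS, connect, or client timeout).
probe() {
  local url="$1" count="${2:-30}" interval="${3:-10}" i result
  for ((i = 1; i <= count; i++)); do
    # --max-time is above Nginx's 60s default, so a 504 can still arrive.
    result=$(curl -s -o /dev/null --max-time 90 \
      -w '%{http_code} %{time_total}' "$url" || true)
    printf '%s %s\n' "$(date -u +%H:%M:%S)" "$result"
    if ((i < count)); then
      sleep "$interval"
    fi
  done
}

# summarize: reads probe output on stdin and reports how many requests were 504s.
summarize() {
  awk '{ total++; if ($2 == "504") gateway++ }
       END { printf "%d/%d requests returned 504\n", gateway, total }'
}
```

For example, probe http://appb.domain.com/ 60 10 | tee probe.log | summarize samples the site for roughly ten minutes. Occasional 504s among mostly 200s suggest an intermittent, load-related cause. A 504 on every request points towards a persistent misconfiguration or network problem.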

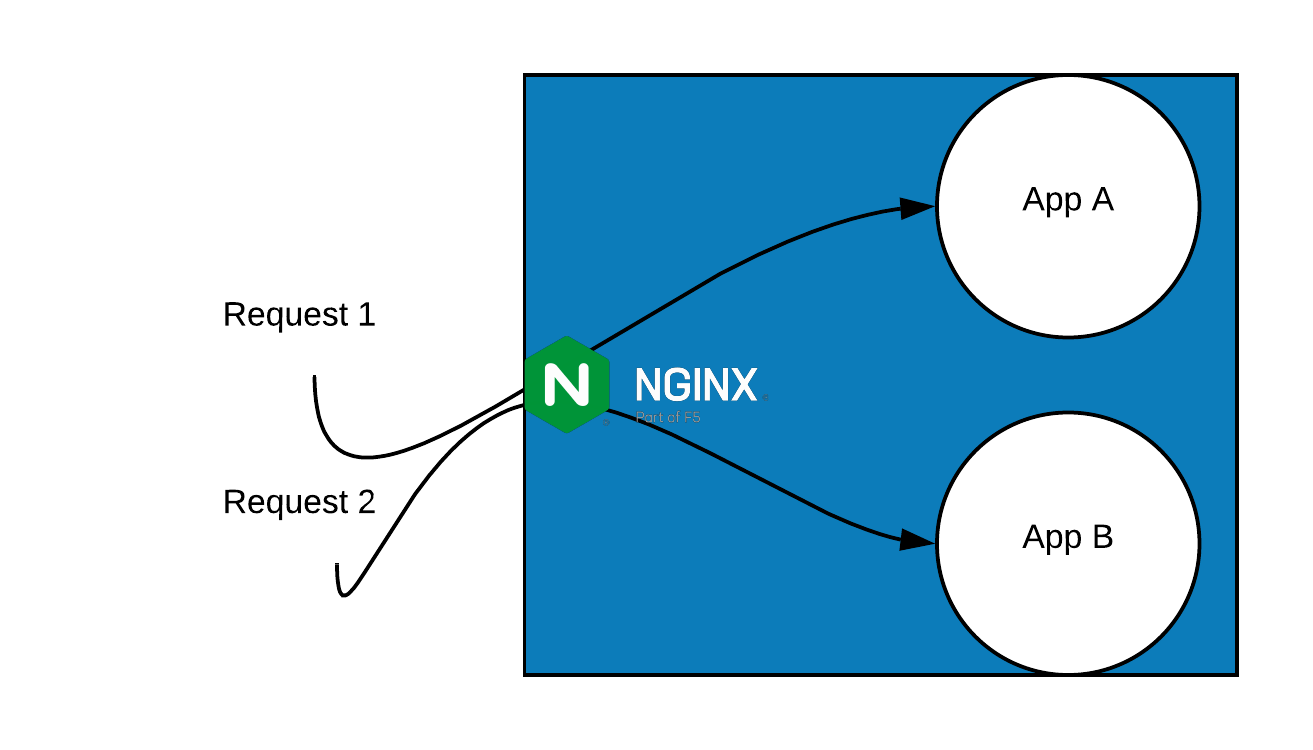

Going forward, we'll use a BitLaunch Ubuntu VPS configured as an Nginx reverse proxy as an example, with the domain name domain.com. The diagram above gives a rough overview of the infrastructure. The Nginx logo represents our reverse proxy server, which handles requests for two upstream applications.

How to increase the default Nginx request timeout

If your upstream server is slow, increasing the amount of time your Nginx gateway or proxy is willing to wait can stop the error from occurring. However, unless your Nginx timeout was configured with an unreasonably low value, this is more of a mitigation than a fix. A higher timeout means your users have to wait longer before a page is served to them, which degrades their experience.

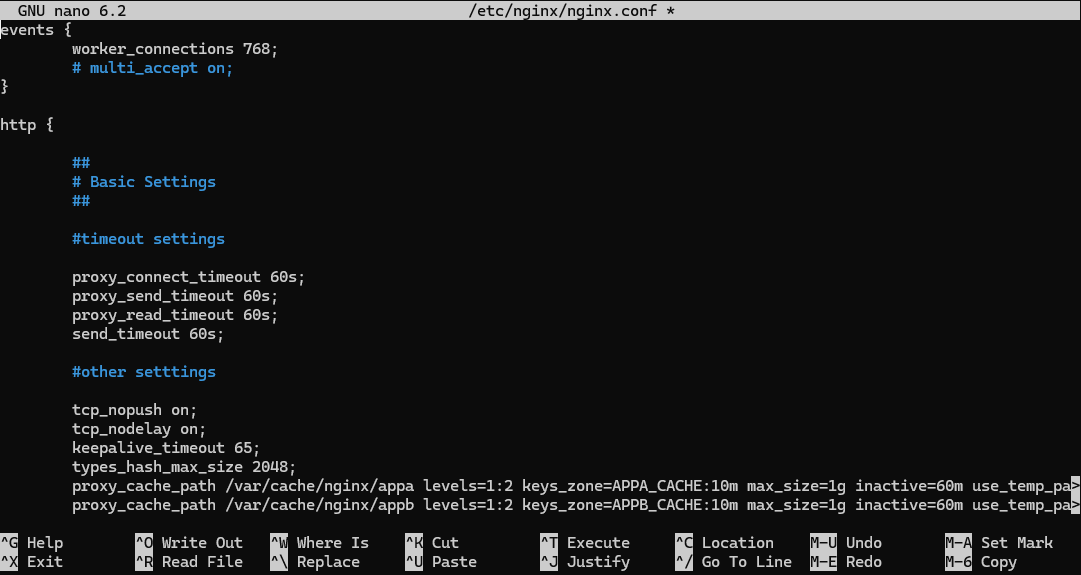

You can change the default Nginx request timeout values in your Nginx configuration file. Here's how to do it step by step from the Linux command line:

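1. Open your Nginx config file, which is usually located at /etc/nginx/nginx.conf:

sudo nano /etc/nginx/nginx.conf

2. In your configuration file, scroll until you find the line that starts with http {. Underneath it, paste the following:

proxy_connect_timeout 60s;
proxy_send_timeout 60s;
proxy_read_timeout 60s;
send_timeout 60s;

Note that 60 seconds is already Nginx's default for all four directives. To actually extend the wait, you need a larger value (for example, proxy_read_timeout 120s;). The Nginx documentation notes that proxy_connect_timeout usually can't exceed 75 seconds. If Nginx passes requests to PHP-FPM with fastcgi_pass rather than proxy_pass, the directive to raise is fastcgi_read_timeout instead.

3. Press Ctrl + O and then Enter to save your changes, followed by Ctrl + X to exit.

4. Test and reload your Nginx configuration to apply the changes:

sudo nginx -t
sudo systemctl reload nginx

Remember, this is a temporary mitigation that does not fix the underlying cause. It's not acceptable for a user to have to wait up to sixty seconds before their content is served. As a next step, you should at least test and monitor response times from the upstream server to ensure they remain reasonable and do not increase further.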
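Timeout directives can be set in several places: nginx.conf, included files, or individual server and location blocks. It's therefore worth confirming which values your running configuration actually contains. Nginx's -T flag tests the configuration and dumps every loaded file to standard output. The list_timeouts helper below is a hypothetical filter for that dump, sketched in bash with grep and sed.

```bash
#!/usr/bin/env bash
# list_timeouts: reads an Nginx configuration dump on stdin and prints every
# proxy, send, or FastCGI read timeout directive it contains, with leading
# whitespace removed. Commented-out lines are skipped because they start with #.
list_timeouts() {
  grep -E '^[[:space:]]*(proxy_connect_timeout|proxy_send_timeout|proxy_read_timeout|send_timeout|fastcgi_read_timeout)[[:space:]]' \
    | sed 's/^[[:space:]]*//'
}
```

Run it with sudo nginx -T 2>/dev/null | list_timeouts. If it prints nothing, none of these directives are set explicitly and Nginx's 60-second defaults apply.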

Testing for and resolving network issues

Before you dive into other fixes, it's worth checking whether your upstream server is reachable and measuring the latency along the route. From the client that's experiencing the issue, run a traceroute to the upstream server using the following command:

traceroute your.server.ip

Example output (this sample was captured with Windows' tracert, whose format differs slightly from Linux traceroute):

1 11 ms 3 ms 3 ms 192.168.10.1
2 * * * Request timed out.
3 20 ms 17 ms 28 ms 62.113.254.62
4 * * * Request timed out.
5 27 ms 31 ms 17 ms ae1-b1-20751.ip.telenor.se [213.204.123.21]
6 37 ms 17 ms 18 ms ti3218b400-ae14-10.ti.telenor.net [148.122.11.33]
7 * * * Request timed out.
8 60 ms 39 ms 42 ms ti9002b400-ae5-0.ti.telenor.net [142.172.102.81]
9 * * * Request timed out.
10 * * * Request timed out.
11 * * * Request timed out.
12 58 ms 54 ms 63 ms 5.254.123.34
13 37 ms 57 ms 58 ms 162.252.198.231 <--- destination server

On a Windows machine, use tracert instead. Take note of timeouts or particularly high latency values along the route. A hop that shows * * * while later hops respond normally is usually just a router that doesn't answer traceroute probes, which isn't a problem in itself. Timeouts that continue all the way to the end, or a latency jump that persists across every following hop, are more telling. If you're running a reverse proxy, do the same from the server hosting the proxy to its upstream server.

If you cannot connect to the upstream server at all, check that it is running correctly and that your firewall is configured properly. For a standard web server, your firewall needs to allow traffic on port 80 (and on port 443 if you serve HTTPS):

sudo ufw allow 80
sudo ufw allow 443

Also ensure that the ports required for any other applications or services you are running are open, including the port your upstream application listens on.
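A traceroute only shows that packets reach the host. It doesn't prove that the web or application port is accepting connections. The hypothetical check_port helper below is a minimal sketch that tries to connect and reports the result. It assumes bash, whose /dev/tcp/HOST/PORT redirection opens a TCP connection, and the coreutils timeout command. Run it from your proxy against the upstream's port, for example check_port your.server.ip 80.

```bash
#!/usr/bin/env bash
# check_port HOST PORT [SECONDS]
# Succeeds (exit 0) if a TCP connection to HOST:PORT opens within SECONDS
# (default 5). The inner bash receives HOST as $0 and PORT as $1.
check_port() {
  local host="$1" port="$2" secs="${3:-5}"
  if timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "open: $host:$port"
  else
    echo "closed or filtered: $host:$port"
    return 1
  fi
}
```

An immediate "closed or filtered" result usually means nothing is listening on that port. A result that only appears after the full timeout suggests that a firewall is silently dropping the traffic.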

If initial tests show issues with response times, you can measure them in more detail. Here are some ideas:

1. Test each phase of the request from the client with curl:

curl -sS -o /dev/null \
  -w 'dns=%{time_namelookup} tcp=%{time_connect} tls=%{time_appconnect} ttfb=%{time_starttransfer} total=%{time_total} size=%{size_download} code=%{http_code}\n' \
  http://appa.domain.com/

2. Log how long upstreams take on your Nginx reverse proxy server. Add the following inside the http { block of nginx.conf, then test and reload Nginx:

log_format timed '$remote_addr "$request" '
                 'status=$status rt=$request_time '
                 'urt=$upstream_response_time '
                 'uct=$upstream_connect_time '
                 'uht=$upstream_header_time';
access_log /var/log/nginx/access.timed.log timed;

3. Use hey to load test latency:

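hey -n 200 -c 10 -H "Cache-Control: no-cache" http://appb.domain.com/

This sends 200 requests, 10 at a time, and reports a summary that includes a latency distribution.

Troubleshooting DNS issues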

Problems with domain name resolution can lead to Nginx 504 errors. This is particularly common if you have just created your site or migrated it to a new server or host, and the DNS records have not fully propagated.

The most convenient way to test for DNS propagation worldwide is to enter your domain name into DNSMap. We also recommend checking your DNS records for any issues. BitLaunch customers can manage their DNS records directly from their control panel by selecting the "DNS" tab at the top of the screen.

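It's also possible that the DNS issue is client-side, and you may just need to flush your DNS cache. On Windows, run:

ipconfig /flushdns

On Linux systems that use systemd-resolved, the equivalent is:

sudo resolvectl flush-caches

Changing the DNS resolver in your network settings could also fix the problem if your ISP's DNS servers are having issues.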
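You can also spot-check propagation from the command line. Ask several public resolvers for your domain's A record and compare their answers with the IP you expect. The sketch below is a minimal example that assumes bash and dig (from the dnsutils or bind-utils package). check_propagation is a hypothetical helper, and the resolver list (Cloudflare 1.1.1.1, Google 8.8.8.8, Quad9 9.9.9.9) is just an example.

```bash
#!/usr/bin/env bash
# check_propagation DOMAIN EXPECTED_IP [RESOLVER...]
# Asks each resolver for DOMAIN's A record and reports whether EXPECTED_IP
# is among the answers. Returns 1 if any resolver gave a different answer.
check_propagation() {
  local domain="$1" expected="$2"
  shift 2
  local resolvers=("$@")
  if [ "${#resolvers[@]}" -eq 0 ]; then
    resolvers=(1.1.1.1 8.8.8.8 9.9.9.9)   # Cloudflare, Google, Quad9
  fi
  local r answers stale=0
  for r in "${resolvers[@]}"; do
    # +short prints only the answer records; +time/+tries keep failures quick.
    answers=$(dig +short +time=3 +tries=1 A "$domain" "@$r" || true)
    if grep -qxF -- "$expected" <<<"$answers"; then
      printf '%-6s%s\n' "OK" "$r"
    else
      printf '%-6s%s\n' "STALE" "$r"
      stale=1
    fi
  done
  return "$stale"
}
```

For example, check_propagation domain.com 162.252.198.231. A STALE line doesn't always mean your records are wrong. That resolver may simply still be serving a cached copy of the old record until its TTL expires.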

Troubleshooting CDN problems

If you're using a content delivery network (CDN) such as Cloudflare, the problem could be on their end. The easiest way to check this is by temporarily disabling your CDN in its control panel or by disabling your CDN plugin if you're using WordPress.

If the error does not occur when the CDN is turned off, check your CDN settings and contact your CDN provider or web host for further help. Often, the cause is an outage that the provider is already working to fix.

Checking for general errors on your upstream server

Checking your server logs will help you to determine if the issue is caused by your server crashing or other general problems. First, we can check the Nginx logs on our reverse proxy server. This will help us to determine when and how often this error has occurred:

sudo tail -f /var/log/nginx/error.log

Output:

2025/10/20 11:43:58 [notice] 132638#132638: signal process started
2025/10/20 12:00:37 [notice] 132734#132734: signal process started
2025/10/20 12:00:51 [error] 132735#132735: *693 upstream timed out (110: Unknown error) while reading response header from upstream, client: 94.234.70.63, server: appb.domain.com, request: "GET / HTTP/1.1", upstream: "http://162.252.198.231:4000/", host: "appb.domain.com"

In this example, it looks like the Nginx timeout error has occurred with appb.domain.com, but never with app A. Time to do some digging on the upstream app B server.
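On a busy proxy, reading tail -f output by eye gets tedious. The sketch below counts upstream timeouts per server name and upstream address, which makes that app A versus app B comparison quick. It assumes bash with grep and sed, plus the error-log line format shown above. count_upstream_timeouts is a hypothetical name.

```bash
#!/usr/bin/env bash
# count_upstream_timeouts [LOGFILE...]
# Counts "upstream timed out" errors per (server, upstream) pair, busiest
# first. Reads stdin when no files are given.
count_upstream_timeouts() {
  grep -h 'upstream timed out' "$@" \
    | sed -n 's/.*server: \([^,]*\),.*upstream: "\([^"]*\)".*/\1 \2/p' \
    | sort | uniq -c | sort -rn
}
```

For example, sudo cat /var/log/nginx/error.log | count_upstream_timeouts. Each output line shows a count followed by the server name and upstream address. An upstream that dominates the list is the first one to investigate.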

If you're using Nginx on the upstream server, you can check the logs there, too, using the same command:

sudo tail -f /var/log/nginx/error.log

You can also check /var/log/nginx/access.log to see every request that Nginx handles. It records what the client asked for, how Nginx responded (status code), how long the request took (if configured), and so on. Look for patterns, such as particular URLs, clients, or times of day that coincide with the errors.
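If you added the timed log_format from the latency section earlier, the access log also records how long each upstream took, which makes slow endpoints easy to spot. The sketch below is a minimal example that assumes bash with awk and that exact log format. slow_upstreams is a hypothetical helper.

```bash
#!/usr/bin/env bash
# slow_upstreams THRESHOLD [LOGFILE...]
# Prints requests from the "timed" access log whose upstream response time
# (urt=) is above THRESHOLD seconds, prefixed with that time. Reads stdin if
# no file is given. Lines with urt=- (no upstream was contacted) never match,
# and when several upstreams were tried only the first value is used.
slow_upstreams() {
  local threshold="$1"
  shift
  awk -v t="$threshold" '
    match($0, /urt=[0-9.]+/) {
      urt = substr($0, RSTART + 4, RLENGTH - 4) + 0
      if (urt > t + 0) printf "%.3f\t%s\n", urt, $0
    }
  ' "$@"
}
```

For example, sudo cat /var/log/nginx/access.timed.log | slow_upstreams 1.0 | sort -rn | head lists the ten slowest upstream responses that took more than a second.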

You will also want to check your system logs. These include:

- /var/log/syslog

- /var/log/messages

- /var/log/kern.log

- journalctl

Examine these, as well as logs from any applications or services you are running. Cross-reference any errors or warnings with the times your issue occurred, and look into resolving them.

Diagnosing and resolving upstream server performance

A simple (and perhaps most common) reason for Nginx 504 errors is a lack of resources on the upstream server.

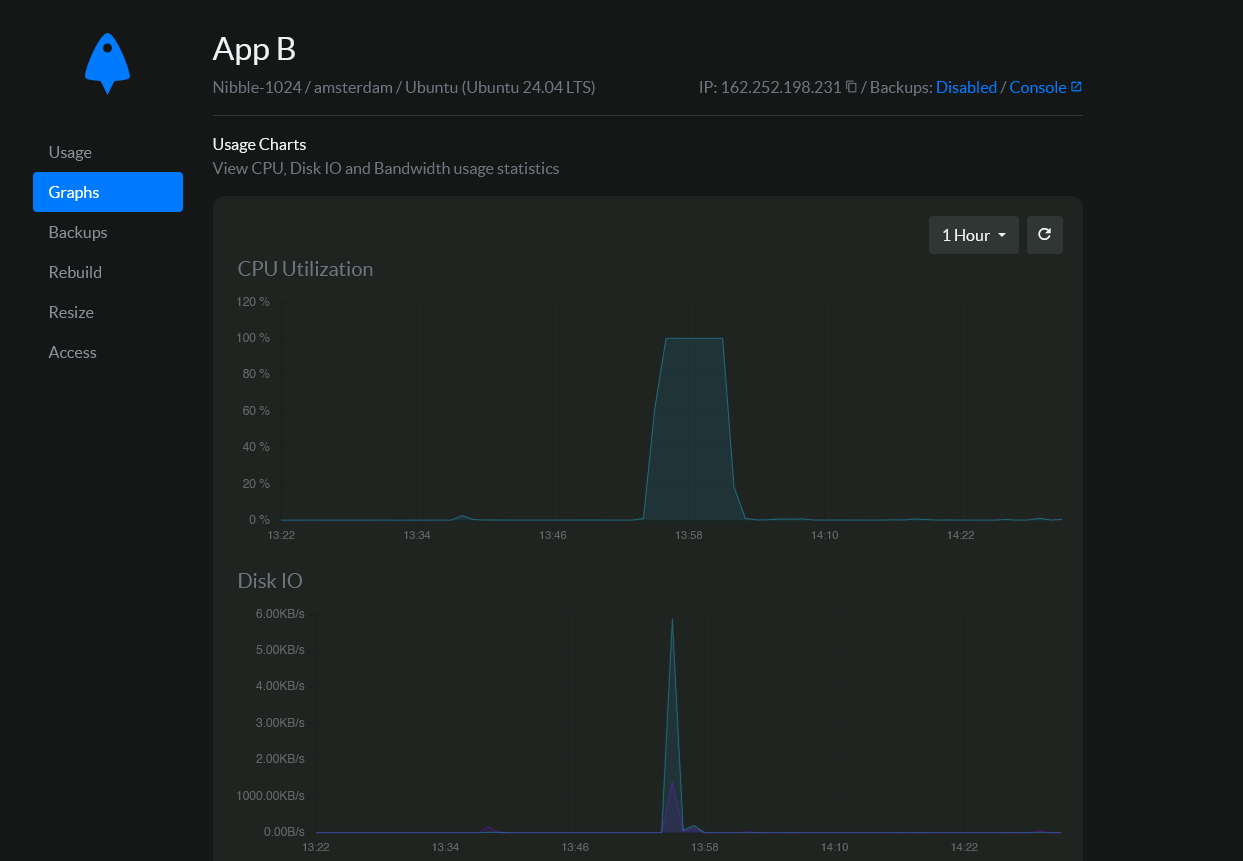

BitLaunch VPS customers can easily check their historical CPU, bandwidth, and disk utilization by clicking the server in their control panel and selecting "Graphs". If your host does not provide such tools, you can read our guide on Linux performance monitoring to check for yourself.

In this example, we can see a huge spike in CPU utilization at the same time the timeout error occurred. If we then check the syslog on server B, we can see that somebody started a stress test shortly before the error occurred. There's our culprit:

2025-10-20T12:00:00.389956+00:00 68f611c7f8a27d7b5a8418f3 stress-ng: invoked with 'stress-ng --matrix -1' by user 0 'root'

2025-10-20T12:00:12.391830+00:00 68f611c7f8a27d7b5a8418f3 stress-ng: system: '68f611c7f8a27d7b5a8418f3' Linux 6.8.0-31-generic #31-Ubuntu SMP PREEMPT_DYNAMIC Sat Apr 20 02:40:06 UTC 2024 x86_64

2025-10-20T12:02:29.391870+00:00 68f611c7f8a27d7b5a8418f3 stress-ng: memory (MB): total 961.66, free 208.88, shared 3.00, buffer 45.55, swap 0.00, free swap 0.00

Of course, in a real-world scenario, performance issues are more likely to be caused by a particular application or process, a large database query, a DDoS attack, and so on.
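If your host doesn't provide graphs, a quick snapshot from the shell can show whether the upstream is CPU-bound at the moment you see timeouts. The sketch below assumes a Linux server with bash, coreutils nproc, /proc/loadavg, and procps ps. check_load and top_processes are hypothetical names. Comparing the load average against the core count is only a rough heuristic, because on Linux the load also counts tasks blocked on disk I/O.

```bash
#!/usr/bin/env bash
# check_load: prints the 1/5/15-minute load averages next to the CPU count
# and warns when the 5-minute average exceeds the number of cores.
check_load() {
  local cores load1 load5 load15 rest
  cores=$(nproc)
  read -r load1 load5 load15 rest < /proc/loadavg
  awk -v c="$cores" -v l1="$load1" -v l5="$load5" -v l15="$load15" 'BEGIN {
    printf "cores=%d load(1/5/15m)=%s/%s/%s\n", c, l1, l5, l15
    if (l5 + 0 > c + 0)
      print "WARNING: 5-minute load exceeds core count; the CPU may be saturated"
  }'
}

# top_processes [N]: the N processes using the most CPU (plus the header line).
top_processes() {
  ps -eo pid,user,comm,%cpu,%mem --sort=-%cpu | head -n "$(( ${1:-10} + 1 ))"
}
```

Running check_load; top_processes 5 while the errors are happening usually points straight at the process responsible, such as the stress-ng run in the example above.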

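You can list your server's TCP and UDP sockets, along with the processes using them, with:

sudo ss -atpu

To count connections per remote IP address, you can use:

netstat -ntu | awk '{print $5}' | cut -d: -f1 -s | sort | uniq -c | sort -nk1 -r

If you see external IPs with 100+ connections, someone may be attacking your server, and you should investigate further. Bear in mind that on an upstream server, your reverse proxy's own IP address can legitimately account for many connections.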
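The netstat command comes from the older net-tools package, which isn't always installed on newer distributions. Below is a sketch of a rough equivalent built on ss from iproute2. It assumes bash and awk. top_peers and its default threshold of 100 are illustrative, mirroring the rule of thumb above.

```bash
#!/usr/bin/env bash
# top_peers [THRESHOLD]
# Reads `ss -Hnt` output on stdin (State Recv-Q Send-Q Local Peer) and prints
# remote addresses holding at least THRESHOLD TCP connections, busiest first.
top_peers() {
  local threshold="${1:-100}"
  awk '{
      peer = $5
      sub(/:[0-9]+$/, "", peer)   # strip the port
      sub(/^\[/, "", peer)        # strip IPv6 brackets
      sub(/\]$/, "", peer)
      print peer
    }' \
    | sort | uniq -c | sort -rn \
    | awk -v t="$threshold" '$1 >= t'
}
```

Use it as ss -Hnt | top_peers 100. Here -H drops the header line, -n keeps addresses numeric, and -t limits the output to TCP. Without -a, ss lists only non-listening sockets, so listening ports don't skew the counts.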

Suspect your server is being DDoS'd? Get free DDoS protection by hosting on BitLaunch.

How to implement Nginx caching to improve response times

Implementing caching on your reverse proxy server or load balancer reduces the number of requests that reach your upstream servers and speeds up responses for cached content, which may stop requests from timing out. Caching stores a copy of your site's content to serve to visitors, so it doesn't have to be fetched fresh from the upstream for every request. This can improve performance and reduce load significantly on a high-traffic site or app.

How to check whether Nginx is caching

You can get a quick hint about whether responses are being cached by using curl -I to inspect the response headers:

curl -I appb.domain.com

Output (excerpt):

Cache-Control: private, max-age=0, no-store, no-cache, must-revalidate, post-check=0, pre-check=0

If you see no-cache, no-store, or private in the Cache-Control header, the upstream is telling caches not to store the response. Nginx respects this by default, so the page is not being cached.

Implementing Nginx caching

You can quickly implement Nginx caching on your server block by following the steps below. We'll use our reverse proxy server and two apps from earlier as an example.

- First, let's make the directories where our cache files will be stored and give them the right permissions (www-data is the user Nginx runs as on Ubuntu):

sudo mkdir -p /var/cache/nginx/appa /var/cache/nginx/appb
sudo chown -R www-data:www-data /var/cache/nginx

1. Open your nginx.conf file using your favorite text editor. For example:

sudo nano /etc/nginx/nginx.conf

2. Inside the http { block, define your cache zones. Something like this should work:

proxy_cache_path /var/cache/nginx/appa levels=1:2 keys_zone=APPA_CACHE:10m max_size=1g inactive=60m use_temp_path=off;
proxy_cache_path /var/cache/nginx/appb levels=1:2 keys_zone=APPB_CACHE:10m max_size=1g inactive=60m use_temp_path=off;

We've defined separate zones for app A and app B, giving them independent caches. You can add paths for as many or as few apps as you like and tune the values for each. The settings work as follows:

- keys_zone=APPA_CACHE:10m allocates 10 MB of shared memory for cache keys and metadata. The Nginx documentation puts this at roughly 8,000 keys per megabyte.

- max_size=1g caps the cached data on disk at 1 GB.

- inactive=60m removes items that haven't been accessed for 60 minutes.

- levels=1:2 stores the cache files in a two-level directory hierarchy.

- use_temp_path=off writes files straight into the cache directory instead of a separate temporary path.

You can call the zones whatever makes sense; they don't have to be APPA_CACHE and APPB_CACHE.

3. Edit your virtual host file (in this example, /etc/nginx/sites-available/reverse-proxy). In the server { block for each app, reference its cache zone and add the caching directives, like so:

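server {
    listen 80;
    server_name appa.domain.com;

    location / {
        proxy_pass http://appA;
        proxy_cache APPA_CACHE;
        proxy_cache_valid 200 301 302 10m;
        proxy_cache_use_stale error timeout invalid_header updating;
        proxy_ignore_headers Cache-Control Expires;
        add_header X-Cache-Status $upstream_cache_status always;
    }
}

Repeat this for app B, using its own server_name and upstream along with APPB_CACHE (or whichever zone names you set in the previous step). The proxy_cache_use_stale line is particularly relevant here. With error and timeout listed, Nginx serves a stale cached copy of a page instead of an error when the upstream fails or times out. Because proxy_ignore_headers Cache-Control Expires tells Nginx to cache responses even when the application marks them private or no-cache, you should also add bypass rules to avoid caching user-specific pages. These vary depending on your application or CMS, so we won't cover them here.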

4. Test your Nginx config using sudo nginx -t. If the test succeeds, reload the configuration with:

sudo systemctl reload nginx

5. Check whether caching is working by requesting the same page twice with curl:

curl -sI http://appb.domain.com | grep -i "^x-cache-status"
curl -sI http://appb.domain.com | grep -i "^x-cache-status"

The first request should show MISS and the second HIT.
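To script that check, for example after each config change, you can fetch the same URL twice and print only the X-Cache-Status value. The sketch below is a minimal example that assumes bash, curl, and the add_header X-Cache-Status line from the server block above. cache_status and check_cache are hypothetical names.

```bash
#!/usr/bin/env bash
# cache_status URL: prints the value of the X-Cache-Status response header
# (e.g. MISS, HIT, EXPIRED, BYPASS), or nothing if the header is absent.
cache_status() {
  curl -sI "$1" | awk -F': *' 'tolower($1) == "x-cache-status" {
    sub(/\r$/, "", $2)   # HTTP header lines end in CRLF
    print $2
  }'
}

# check_cache URL: requests URL twice. On a cacheable page, the first
# response is typically MISS (or EXPIRED) and the second HIT.
# Exits 0 only if the second response was a HIT.
check_cache() {
  local first second
  first=$(cache_status "$1")
  second=$(cache_status "$1")
  echo "first=${first:-none} second=${second:-none}"
  [ "$second" = "HIT" ]
}
```

For example, check_cache http://appb.domain.com prints both values and exits non-zero if the second request wasn't served from the cache. That makes it easy to drop into a deployment script.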

Upgrading your server

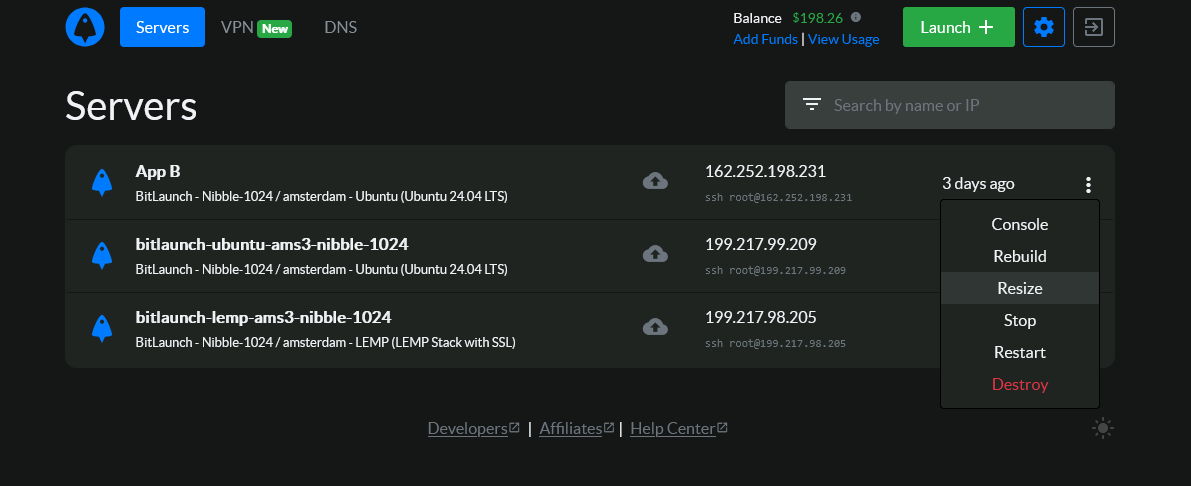

If your resource usage is persistently high, it's a sign that you either need to optimize your server or upgrade it.

504 errors are particularly common on shared hosting because your websites do not have dedicated resources. If another site on the same server receives heavy traffic, it can slow down responses from your site or application and cause timeouts. Upgrading your shared hosting plan may help, but a more reliable way to ensure this doesn't happen again is switching to a VPS provider like BitLaunch.

BitLaunch customers get dedicated virtual resources that they don't need to share with anybody else. Additionally, if their traffic grows beyond what their current server configuration can handle, they can easily upgrade the server from the control panel.

If upgrading your server is not possible, splitting your load across multiple servers and optimizing your database should also help.

Closing words and advice

To summarize what we've learned, Nginx 504 errors are usually caused by one of three things:

- Network or performance issues with the upstream server, whether due to downtime, DNS resolution problems, a server crash, or a poorly configured firewall

- The Nginx timeout value being too low in your config file

- The upstream server(s) not having sufficient resources or requiring further optimization

While we can't provide you with a fix for every eventuality, Nginx timeouts can usually be resolved by:

- Identifying and resolving system, application, or web server errors on your upstream server

- Ensuring your firewall is properly configured to allow http/https traffic

- Increasing the Nginx timeout value in your config file if it has been set too low

- Scaling your upstream server to increase the number of resources available to it

BitLaunch customers can get further help troubleshooting this issue by talking to our expert support team via live chat.

Not a BitLaunch customer? Sign up today and talk to our support for some free credit.